# ZFS (Ubuntu)

Unlike most other storage systems, ZFS unifies the roles of volume manager and file system. It therefore has complete knowledge of both the physical disks and the volumes. (Wikipedia)

ZFS – baked directly into Ubuntu – supported by Canonical.

- What does “support” mean?

- You’ll find zfs.ko automatically built and installed on your Ubuntu systems. No more DKMS-built modules!

- You’ll see the module loaded automatically if you use it.

- The user space zfsutils-linux package will be included in Ubuntu Main, with security updates provided by Canonical.

## The Basics

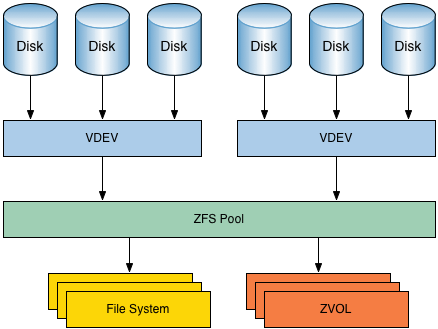

A ZFS pool, and thus your file system, is built from one or more VDEVs, and those VDEVs contain the actual hard drives.

Fault tolerance (redundancy) is handled within a VDEV. A redundant VDEV is either a mirror (comparable to RAID-1), RAID-Z1 (comparable to RAID-5) or RAID-Z2 (comparable to RAID-6). There is even a triple-parity layout, RAID-Z3.
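As a rough illustration of what each parity level costs in capacity, the sketch below estimates usable space as (number of disks minus parity level) times disk size. The helper name `raidz_usable_tb` is made up for these notes, and the estimate ignores metadata, padding and reserved space, so a real pool will report somewhat less.

```shell
# Rough usable-capacity estimate for one RAID-Z VDEV (hypothetical helper).
# usage: raidz_usable_tb <disks> <disk_size_tb> <parity: 1|2|3>
raidz_usable_tb() {
    disks=$1; size_tb=$2; parity=$3
    if [ "$disks" -le "$parity" ]; then
        echo "need more disks than the parity level" >&2
        return 1
    fi
    # Parity consumes the equivalent of <parity> disks; the rest holds data.
    echo $(( (disks - parity) * size_tb ))
}

raidz_usable_tb 6 4 1   # RAID-Z1 with six 4 TB disks: prints 20
raidz_usable_tb 6 4 2   # RAID-Z2 with six 4 TB disks: prints 16
raidz_usable_tb 6 4 3   # RAID-Z3 with six 4 TB disks: prints 12
```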

So it’s important to understand that

- a ZFS pool itself is not fault-tolerant.

- If you lose a single VDEV within a pool, you lose the whole pool.

- If you lose the pool, all data is lost.

It is also very important to understand that you cannot add hard drives to an existing RAID-Z VDEV (not yet; see the RAIDZ expansion section, which should change this soon).

- Plan your ZFS build with the VDEV limitation in mind.

Many home NAS builders choose RAID-Z2 (the RAID-6 equivalent) for the extra redundancy. This makes sense because a double drive failure is not unheard of, especially during rebuilds, when all drives are taxed heavily for many hours.

## Maintenance

### Check Data Integrity (scrub)

zpool scrub <rpool> # start

zpool scrub -s <rpool> # stop

sudo zpool status

cd /storage_pool

df -h .

see also Replacing a (silently) failing disk in a ZFS pool
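To catch a silently failing disk early, the scrub and status commands above can be combined into a small periodic check. This is only a sketch: the helper name `pool_is_healthy` is invented here, and it relies on `zpool status -x <pool>` printing a line such as `pool 'storage_pool' is healthy` when no problems are known.

```shell
# Hypothetical helper: succeed only if zpool reports the pool as healthy.
pool_is_healthy() {
    pool=$1
    # -x limits the output to pools with problems; a healthy pool yields
    # a single "pool '<name>' is healthy" line.
    zpool status -x "$pool" | grep -q "is healthy"
}

# Example (as root, e.g. from cron some time after a scrub was started):
#   zpool scrub storage_pool
#   pool_is_healthy storage_pool || zpool status -v storage_pool
```

A scrub runs in the background, so the health check only reflects its findings once `zpool status` shows the scrub as finished.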

## Configuration

see also ZFSBootMenu

### 1 - Pool - RAID5 / Z1

Create the pool; the RAID-Z1 VDEV (the storage) is created as part of the same command.

zpool create storage_pool raidz1 /dev/sda /dev/sdb /dev/sdc

zpool status

cd /storage_pool

df -h .

### 2 - Create ZFS Filesystem (or dataset)

At this point we have a zpool spanning three disks. One disk's worth of capacity is used for parity, which lets the pool survive a single disk failure. The next step is to make the volume usable and add features such as compression, encryption or deduplication.

Multiple ZFS file systems (datasets) can be created on a single pool; the pool's storage is available to any dataset as it needs it.
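As one example of the features mentioned above, compression can be switched on at the pool's root dataset so that datasets created later inherit it. This is a sketch under assumptions: the helper name `enable_compression` is invented for these notes, `lz4` is simply a commonly used algorithm, and `storage_pool` is the pool created in the previous step.

```shell
# Hypothetical helper: enable lz4 compression on a dataset and show the result.
enable_compression() {
    dataset=$1
    zfs set compression=lz4 "$dataset" || return 1
    # Only data written after this point is compressed, so compressratio
    # stays at 1.00x until new data arrives.
    zfs get compression,compressratio "$dataset"
}

# Example (as root):
#   enable_compression storage_pool
```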

ZFS file systems can be created and destroyed by using the zfs create and zfs destroy commands. ZFS file systems can be renamed by using the zfs rename command.

zfs create -o mountpoint=[MOUNT POINT] [ZPOOL NAME]/[DATASET NAME]

zfs create -o mountpoint=/mnt/binaries storage_pool/binaries

zfs list # check that the datasets have been created

### Renaming a ZFS File System

With the rename subcommand, you can perform the following operations:

- Change the name of a file system.

- Relocate the file system within the ZFS hierarchy, optionally renaming it at the same time.

zfs rename tank/home/kustarz tank/home/kustarz_old # rename

zfs rename tank/home/maybee tank/ws/maybee # relocate (mv)

When you relocate a file system through rename, the new location must be within the same pool and must have enough disk space to hold the file system. If the new location does not have enough disk space, possibly because it has reached its quota, the rename operation fails.
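The same-pool rule can be checked before calling `zfs rename`, since the pool name is simply the part of a dataset name before the first `/`. The helpers below (`pool_of`, `safe_rename`) are hypothetical names used only for this sketch; they do not check quotas or free space, which `zfs rename` still enforces itself.

```shell
# Hypothetical helper: print the pool a dataset belongs to.
pool_of() {
    # Strip everything from the first "/" onwards; a bare pool name is unchanged.
    echo "${1%%/*}"
}

# Hypothetical helper: refuse a rename that would cross pools.
safe_rename() {
    src=$1; dst=$2
    if [ "$(pool_of "$src")" != "$(pool_of "$dst")" ]; then
        echo "refusing: $src and $dst are in different pools" >&2
        return 1
    fi
    zfs rename "$src" "$dst"
}

# Example (as root):
#   safe_rename tank/home/maybee tank/ws/maybee
```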

### Changing FS Mount Point

To change the mount point of the file system techrx/logs to /var/logs, first create the mount point directory if it does not exist (a plain mkdir is enough), then set the mountpoint property:

mkdir /var/logs

zfs set mountpoint=/var/logs techrx/logs

## ZFS Event Daemon (ZED)

What ZED does that is so great is that it provides a very simple way to take action when ZFS events happen. - In praise of ZFS On Linux’s ZED
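ZED is configured through a shell-style file, normally `/etc/zfs/zed.d/zed.rc`. The fragment below is a sketch of mail notification settings; the values are examples rather than recommendations, and the comments in the file shipped by your distribution remain the authoritative description of each variable.

```shell
# Fragment for /etc/zfs/zed.d/zed.rc (example values).

# Address that receives ZED notifications; needs a working local mail setup.
ZED_EMAIL_ADDR="root"

# Minimum number of seconds between notifications for a similar event.
ZED_NOTIFY_INTERVAL_SECS=3600

# 0: stay quiet while the pool is healthy; 1: notify regardless of pool
# health, for example when a scrub finishes without errors.
ZED_NOTIFY_VERBOSE=1
```

ZED usually has to be restarted to pick up changes; on Ubuntu the service is typically named `zfs-zed`.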

## ZFS RAIDZ expansion

Expanding a RAIDZ Pool

With ZFS, you either have to buy all storage you expect to need upfront, or you will be wasting a few hard drives on redundancy you don’t need. - You can’t add hard drives to a VDEV

- Once available, ZFS RAIDZ expansion will allow adding a new disk to an existing RAIDZ VDEV, with rebalancing (github WP, video).
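Without expansion, the usual way to grow a pool is to add a whole new VDEV next to the existing one, which is where the extra redundancy drives come from. A cautious sketch follows: the helper name `preview_add_vdev` and the device names are placeholders, and the `-n` flag makes `zpool add` print the resulting layout without changing the pool. A RAID-Z VDEV cannot easily be removed again once added, so a dry run is worth the extra step.

```shell
# Hypothetical helper: preview adding another RAID-Z1 VDEV to an existing pool.
preview_add_vdev() {
    pool=$1; shift
    # -n: dry run, display the configuration that would be used.
    zpool add -n "$pool" raidz1 "$@"
}

# Example (as root; device names are placeholders):
#   preview_add_vdev storage_pool /dev/sdd /dev/sde /dev/sdf
```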

## Ref

- ubuntu

- ZFS Concepts and Tutorial

- Never ever use hardware RAID-controller with ZFS.

- Error Correcting RAM (ECC) is recommended, but not mandatory

- The data deduplication feature consumes a lot of memory; use compression instead.

- Data redundancy is not a substitute for backups. Have multiple backups, and store those backups using ZFS!

- Configuring ZFS Cache for High Speed IO

- The ZFS ZIL and SLOG Demystified

- sync mode - sync=disabled speeds up file transfers a lot, but recent synchronous writes can be lost on a crash or power failure (see ZIL safety)

- zfs compression

- zfs properties

zfs get all tank/home
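`zfs get all` prints every property, which is a long list. A narrower query is often easier to read; the sketch below picks the properties discussed in these notes, and the helper name `show_tuning_props` is invented for illustration.

```shell
# Hypothetical helper: show only the properties discussed in these notes.
show_tuning_props() {
    zfs get compression,dedup,sync,mountpoint "$1"
}

# Examples (dataset name as used above):
#   show_tuning_props tank/home
# Only properties set explicitly on this dataset (not inherited or default):
#   zfs get -s local all tank/home
```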